I'm usually a lurker, but I saw Proctor’s post about VRoid, and since I’m a fully 3D ‘Tuber myself (self-made models), I felt inspired to make an account to post about the state of 3D VTubing, since it’s something I’ve been dicking around with these past few months.

Really this is just a big overview briefly explaining the requirements for 3D VTubing, how to get an ok-looking model as a poor/richfag, and the software/hardware that makes it possible to VTube in 3D without looking like hot ass. I'll try not to go too in depth on any one thing.

IMPORTANT: There are a few things here I haven’t personally tested. Tell me if I’m misinformed; I don’t use all of this software, I’m just telling you what I know.

(sorry if my formatting sucks)

Part 1: The Models

Before we start: Many models you can buy will be made up of anywhere from 30,000 to 120,000 tris, and may have a lot of physics/spring bones to calculate, so some can be pretty heavy to run, even before tracking software and cool shaders. (30,000 being really good/optimized, 60,000 being pretty good, and anything above 100,000 being a LOT for this use case. For reference, my model is around 63k tris.)

I know this sounds like gibberish, but remember: Low tri good because more room for shader and vidya in your hardware.

All this is to say: if your PC is potato, just buy a pre-made Live2D model.
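To check where your own model lands on that scale: Blender's viewport Statistics overlay shows the triangle count directly, but the arithmetic behind it is simple, since an n-sided face splits into n - 2 triangles (a quad is 2). A minimal Python sketch, assuming you already have each face's vertex count; the function names are made up for illustration, the cut-offs just mirror the rough numbers above, and the label for the 60k to 100k range is an interpolation, not something any tool reports:

```python
from typing import Iterable


def triangle_count(face_sizes: Iterable[int]) -> int:
    """Triangles after triangulating faces with the given vertex counts.

    A convex n-sided face fan-triangulates into n - 2 triangles:
    triangle -> 1, quad -> 2, pentagon -> 3, and so on.
    """
    total = 0
    for n in face_sizes:
        if n < 3:
            raise ValueError(f"a face needs at least 3 vertices, got {n}")
        total += n - 2
    return total


def rate_tri_budget(tris: int) -> str:
    """Label a tri count using this post's rule-of-thumb thresholds."""
    if tris <= 30_000:
        return "really good/optimized"
    if tris <= 60_000:
        return "pretty good"
    if tris <= 100_000:
        return "between pretty good and a LOT"
    return "a LOT for VTubing"


if __name__ == "__main__":
    faces = [4] * 30_000 + [3] * 3_000  # e.g. 30k quads plus 3k triangles
    tris = triangle_count(faces)
    print(tris, "->", rate_tri_budget(tris))  # 63000 -> between pretty good and a LOT
```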

VRoid:

VRoid is a great poverty option. It’s free, the models are free to edit, and you don’t have to follow any gay usage rules (as far as I know). For the lads with shitty setups, VRoid models have lower poly counts, and only the hair you draw on/choose is really going to increase that, so they take up less processing power.

However, making a VRoid model that doesn’t look like hot garbage takes a bit of effort, with drawing on anime hair and all, and knowledge of how to texture 3D models is a must. (If you’re not super duper poor, you can buy some textures/hair/clothing assets for VRoid on BOOTH and Frankenstein a model out of that.)

The biggest drawback to VRoid is that they all kinda have a ‘style,’ like you can see when a model was made with it. Plus, the facial expressions all look kinda goofy (more on that later), and the shading is subpar, so you might have to improve it (more on that later, too).

Tutorial that’s pretty good to get started (A male one because c’mon, the only women here are chuubas and feds):

^ this person’s account also has more videos on texturing and otherwise making good-looking VRoid models.

^This one also has videos about modelling her own VTuber models with Blender.

Edit: a fellow user let me know that VRoid uses a large image size for each texture (2048×2048), and having several of them will take up extra GPU memory and processing power.

A good rule to remember with texture size is that it should match how big the textured part is on the model. For example, it would be useless to have a 4k-textured necklace, since nobody will be looking that closely.

To optimise this, you can compress the textures, especially for smaller/less important areas of the model (shoes, accessories, etc.).
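To put rough numbers on texture size: GPU memory scales with width × height, so halving a texture's resolution cuts its memory to a quarter, and a full mipmap chain adds about a third on top. A minimal Python sketch of that arithmetic, assuming uncompressed RGBA32 (4 bytes per pixel) versus the block-compressed formats BC1/DXT1 (8 bytes per 4×4 block) and BC7 (16 bytes per 4×4 block); which format Unity actually uses depends on your texture import settings, so treat the output as ballpark figures:

```python
# Bytes per 4x4 block for block-compressed formats, bytes per pixel otherwise.
BLOCK_BYTES = {"BC1": 8, "BC7": 16}
PIXEL_BYTES = {"RGBA32": 4}


def level_size(width: int, height: int, fmt: str) -> int:
    """Bytes for a single mip level in the given format."""
    if fmt in BLOCK_BYTES:
        # Block formats round each dimension up to whole 4x4 blocks.
        blocks_w = (width + 3) // 4
        blocks_h = (height + 3) // 4
        return blocks_w * blocks_h * BLOCK_BYTES[fmt]
    return width * height * PIXEL_BYTES[fmt]


def texture_bytes(width: int, height: int, fmt: str, mipmaps: bool = True) -> int:
    """Total bytes for a texture, optionally including its full mip chain."""
    total = level_size(width, height, fmt)
    while mipmaps and (width > 1 or height > 1):
        width = max(1, width // 2)
        height = max(1, height // 2)
        total += level_size(width, height, fmt)
    return total


def mib(n_bytes: int) -> float:
    return n_bytes / (1024 * 1024)


if __name__ == "__main__":
    for size in (4096, 2048, 1024, 512):
        for fmt in ("RGBA32", "BC7", "BC1"):
            print(f"{size}x{size} {fmt}: {mib(texture_bytes(size, size, fmt)):.2f} MiB")
```

For scale: a single 2048×2048 RGBA32 texture is 16 MiB before mipmaps, versus 4 MiB as BC7 and 2 MiB as BC1, and going from a 4096 to a 1024 necklace texture cuts its memory by a factor of 16.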

Buying a Premade Model:

Obviously not the cheapest option, but it’s the path of least resistance. BOOTH is probably the best place for this.

Drawbacks are little to no male models besides femboys, few customization options, and the creator might impose petty usage rules/forbid streaming with the model, so make sure you find one you can use how you’d like to.

Not much to say for this one, all the work is done for you.

Commission Someone:

Just including this as it’s a possibility. Any oil barons lurking here with a couple thousand to spare: there are a few English-speaking 3D modelers that offer VTuber commissions. Not sure how they’d feel being linked here, so I won’t link any. Most of the trustworthy ones advertise their services on Twitter and VGen and have larger followings. If you find some SEA dweller on Fiverr to make you a model for 100 bucks, remember, you get what you pay for.

Drawbacks are obviously the cost, the fact that you’ll need a full reference sheet (front and side views at least), and the wait, since the modeler might take a few months to finish the commission.

Keep in mind if you take this route:

You are interacting with Twitter artists, and I don’t know any of them personally, so I have no clue if they’re retarded or not.

If one calls you a disgusting thief for sending an AI generated reference, or writes a call-out post about you for asking them a question without proper usage of tone indicators and being trans-misogyny-coded or whatever: they are Twitter users, pls understand.

Modelling it yourself:

Highest effort option. Probably the most rewarding, but if you're a newbie to it, it’s quite daunting, frustrating, and time intensive.

You’ll want to use Blender if you’re new to 3D. It’s free, not specialized to any one facet of the modelling process, and not that anger inducing to use. Most big 3D artists use it in the VTuber sphere, but if you’re new, then there’s a lot to take in, so make sure you orientate yourself with some Blender beginner tutorials and learn the layout.

This part I can write the most about, but for brevity’s sake, I’ll post a couple tutorials for the visual learners here.

^ These are older tutorials, but they helped me when I was starting out.

^ How to texture.

Texturing is extremely important with VTuber models, since they’re not ray traced or anything. It's pretty much make-or-break. Make sure it looks good even before you add a cool shader setup in Unity. I can’t teach you this with words on a page, and artistic skill/a drawing tablet helps, so keep going until it looks good. The undo button is right there if you fuck it up.

^ How 2 Export your Blender model file to a VRM for use in most VTubing applications.
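After exporting, you can sanity-check the file without opening Unity. A .vrm file is a binary glTF (GLB) container: a 12-byte header followed by a JSON chunk that describes the model, with VRM's data stored under `extensions`. A minimal Python sketch that reads that chunk and reports the VRM version and expression names; it assumes the VRM 0.x layout (`extensions.VRM.blendShapeMaster.blendShapeGroups`) and the VRM 1.0 layout (`extensions.VRMC_vrm.expressions`), and the helper names are hypothetical:

```python
import json
import struct

GLB_MAGIC = 0x46546C67   # b"glTF" read as a little-endian uint32
CHUNK_JSON = 0x4E4F534A  # b"JSON" read as a little-endian uint32


def read_glb_json(data: bytes) -> dict:
    """Parse the JSON chunk of a binary glTF (.glb / .vrm) file."""
    if len(data) < 20:
        raise ValueError("file too short to be GLB")
    magic, version, length = struct.unpack_from("<III", data, 0)
    if magic != GLB_MAGIC:
        raise ValueError("not a GLB/VRM file (bad magic)")
    if version != 2:
        raise ValueError(f"unsupported GLB container version {version}")
    if length > len(data):
        raise ValueError("file is truncated")
    chunk_len, chunk_type = struct.unpack_from("<II", data, 12)
    if chunk_type != CHUNK_JSON:
        raise ValueError("first chunk is not JSON")
    return json.loads(data[20:20 + chunk_len].decode("utf-8"))


def vrm_summary(data: bytes) -> dict:
    """Report which VRM flavour the file uses and its expression names."""
    ext = read_glb_json(data).get("extensions", {})
    if "VRMC_vrm" in ext:  # VRM 1.0
        expr = ext["VRMC_vrm"].get("expressions", {})
        names = list(expr.get("preset", {})) + list(expr.get("custom", {}))
        return {"version": "1.0", "expressions": names}
    if "VRM" in ext:  # VRM 0.x
        groups = ext["VRM"].get("blendShapeMaster", {}).get("blendShapeGroups", [])
        return {"version": "0.x", "expressions": [g.get("name", "") for g in groups]}
    return {"version": None, "expressions": []}


if __name__ == "__main__":
    import sys

    for path in sys.argv[1:]:
        with open(path, "rb") as f:
            print(path, vrm_summary(f.read()))
```

Run it as `python vrm_check.py model.vrm` (the file name is just an example). A `None` version means the file has no VRM extension data at all, for example a plain .glb.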

Personal advice:

Go into it knowing what you want the finished result to be, and do it in parts: e.g. model the face → body → hair → clothes, unwrap the face → body → hair → clothes, then rig, then texture, and so on. Keep all the parts separate until the model is pretty much done, and keep backups every step. Don’t overwhelm yourself, it’s difficult to get into if you don’t understand wtf is going on. Watch timelapses, or streamers making models live, look at their processes.

Also use references, especially with the face. AI generated references work pretty well if you can get it to generate a front/side view image of the same character. (There are extensions/addons to the AI that allow you to do this, here: https://civitai.com/models/3036/charturner-character-turnaround-helper-for-15-and-21 and this one gives you a VTuber-like full body reference from the front: https://civitai.com/models/55504/character-a-poses-vtuber-reference-pose-fullbody-halfbody. There are also addons that let you generate images in a certain artist’s style, so you can have your own stylized reference to use instead of the usual AI output look.)

The face is the most important part, so make sure it looks good at every angle. You can push and pull the mesh in Blender’s sculpt mode pretty much infinitely, so do that until it looks good. I know it seems like I’m being vague here, but you mostly learn by doing/seeing with 3D, I can’t walk you through it with words.

Part 2: The Shaders

This part is only really important if you're DIYing it with VRoid or Blender!!

Actually using Unity is the 3D software equivalent of giving yourself AIDS, but unfortunately, all the software 3D VTubers use is based on it.

Your shader setup can be as important or as unimportant as you want, depending on what you’re trying to achieve.

Using any shader besides MToon requires converting your model from .vrm to .vsfavatar format. There are a lotta benefits to doing this, but keep your .vrm version on hand, since the .vsfavatar format isn’t used outside VNyan and VSeeFace.

The .vsfavatar format also lets you import custom animations onto the model, so it’s just generally useful to have your model in this format if you’re using VSeeFace or VNyan.

^ Decent starter.

^ This one is way more in depth and explains the expanded shader capabilities with Poiyomi

Shaders available to you:

Poiyomi Toon: Your best bet. The lower tier of the shader is free, and you likely won’t need the paid version. I’ve not run into any real limitations so far.

I could list every way Poiyomi does things better than MToon, but it would take too long, so I’ll link its documentation.

https://www.poiyomi.com/

Short overview:

- Better control over shadows,

- Stencils allow eyebrows to show through hair like in anime/Live2D,

- Dissolve effects, allowing you to remove part of an outfit with a toggle,

- Adding GIFs to your model’s texture,

- Audiolink support (so your outfit is reactive to the sound on stream, for example),

- Better rim lighting,

- Matcaps, so glasses can have an anime shine for example.

Drawbacks: Requires you to join a discord for support.

LilToon: Apparently comparable with Poiyomi. I’ve heard it’s better with environmental lighting, but I haven’t used it. (Anyone with experience here?)

https://github.com/lilxyzw/lilToon/releases

Personal tip:

For the love of god, give the model outlines (and use outline masks for the bad looking outlines). Black outlines can look good for some styles, but don’t most of the time. Use a similar color to your model, but darker.
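For a starting point on "similar color, but darker": keep the hue, nudge saturation up slightly, and drop the brightness in HSV space. A minimal Python sketch using the standard library's `colorsys`; the 0.6 brightness and 1.1 saturation factors are arbitrary assumptions to adjust by eye before pasting the hex code into your shader's outline color:

```python
import colorsys


def outline_color(base_hex: str, value_scale: float = 0.6, sat_boost: float = 1.1) -> str:
    """Return a darker, same-hue outline color for a base color like '#f2c8a0'."""
    h = base_hex.lstrip("#")
    if len(h) != 6:
        raise ValueError("expected a 6-digit hex color")
    r, g, b = (int(h[i:i + 2], 16) / 255 for i in (0, 2, 4))
    hue, sat, val = colorsys.rgb_to_hsv(r, g, b)
    # Keep the hue, push saturation up a little (capped at 1), drop brightness.
    sat = min(1.0, sat * sat_boost)
    val = val * value_scale
    r, g, b = colorsys.hsv_to_rgb(hue, sat, val)
    return "#{:02x}{:02x}{:02x}".format(*(round(c * 255) for c in (r, g, b)))


if __name__ == "__main__":
    for base in ("#f6dfd2", "#ffffff", "#8ec5ff"):
        print(base, "->", outline_color(base))
```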

Part 3: The Tech

This is a big one.

ARKit:

ARKit is the gold standard of face tracking for VTubers, 2D or 3D; assume your oshi uses it. This form of tracking uses 52 blendshapes (facial expressions) to produce probably the most accurate tracking of your face available to us plebeians. You need to set up your model specifically to use it, though:
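For reference, here are the 52 blendshape names ARKit reports (Apple's `ARFaceAnchor.BlendShapeLocation`, in ARKit's own camelCase), plus a check for which ones your model is still missing. A minimal Python sketch; the comparison is case-insensitive on purpose because apps and converters disagree on capitalization, and `missing_arkit_shapes` is a hypothetical helper, not part of any VTubing tool:

```python
from typing import Iterable, List

ARKIT_BLENDSHAPES = [
    # Eyes
    "eyeBlinkLeft", "eyeLookDownLeft", "eyeLookInLeft", "eyeLookOutLeft",
    "eyeLookUpLeft", "eyeSquintLeft", "eyeWideLeft",
    "eyeBlinkRight", "eyeLookDownRight", "eyeLookInRight", "eyeLookOutRight",
    "eyeLookUpRight", "eyeSquintRight", "eyeWideRight",
    # Jaw
    "jawForward", "jawLeft", "jawRight", "jawOpen",
    # Mouth
    "mouthClose", "mouthFunnel", "mouthPucker", "mouthLeft", "mouthRight",
    "mouthSmileLeft", "mouthSmileRight", "mouthFrownLeft", "mouthFrownRight",
    "mouthDimpleLeft", "mouthDimpleRight", "mouthStretchLeft", "mouthStretchRight",
    "mouthRollLower", "mouthRollUpper", "mouthShrugLower", "mouthShrugUpper",
    "mouthPressLeft", "mouthPressRight", "mouthLowerDownLeft", "mouthLowerDownRight",
    "mouthUpperUpLeft", "mouthUpperUpRight",
    # Brows, cheeks, nose, tongue
    "browDownLeft", "browDownRight", "browInnerUp", "browOuterUpLeft", "browOuterUpRight",
    "cheekPuff", "cheekSquintLeft", "cheekSquintRight",
    "noseSneerLeft", "noseSneerRight",
    "tongueOut",
]


def missing_arkit_shapes(shape_key_names: Iterable[str]) -> List[str]:
    """Return ARKit blendshapes with no matching shape key (case-insensitive)."""
    have = {name.lower() for name in shape_key_names}
    return [name for name in ARKIT_BLENDSHAPES if name.lower() not in have]


if __name__ == "__main__":
    assert len(ARKIT_BLENDSHAPES) == 52
    model_keys = ["Basis", "eyeblinkleft", "eyeBlinkRight", "jawOpen"]
    print(len(missing_arkit_shapes(model_keys)), "missing")  # 49 missing
```

In Blender, if the selected mesh has shape keys, their names are `[kb.name for kb in bpy.context.object.data.shape_keys.key_blocks]`, so you can paste the function into Blender's Python console and pass that list in.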

Preparing a model for ARKit Tracking:

VRoid model havers:

HANATool (paid). (https://booth.pm/en/items/2604269)

(This is how you get rid of VRoid’s goofy facial expressions.)

It costs money, but you can probably find it for free somewhere. IDK I don’t use it, but pretty much every VRoid user I’ve seen does.

Tutorial:

Other model havers: You have to make the expressions yourself. It’s not too hard, you do them like any other shapekey in Blender.

If you have no clue about how to do this, or are super bad at it, there are 2 addons to automate this a little (you’ll still have to fix it up after):

FaceIT (paid): (Good if you can acquire it for free. Shit for anime eyes, so you’ll have to make the shapekeys yourself, but mouth shapes are alright.)

Tut:

Blender Facial MoCap Blendshape - GENERATOR (free): Looks solid, but you have to rig the face with something like Rigify already, so it’s too much work for a brainlet who just wants anime face expression tracking.

Tut:

ARKit without handing *pple money (directly):

ARKit requires an iPhone X or better, and iPhone tracking is still better than the ARKit alternatives on other devices. If you’re looking for absolute top of the line tracking, your best bet is buying a used/refurbished iPhone X for a couple hundred bucks. (Just make sure the infrared sensor/Face ID works, as that’s all you need.)

Androidfags have their own ARKit in the form of MeowFace (made by the guy developing VNyan.) However, Google did something, and I heard it barely works anymore (haven’t tested it), so RIP I guess.

The best alternatives right now are RTX webcam tracking (apparently pretty GPU intensive, and requires a modern Nvidia GPU) and MediaPipe webcam tracking (also uses the GPU, but not too heavy from my testing; bear in mind I’m using an RTX 3070).

RTX tracking is built into VTube Studio at least, and some others, I think.

MediaPipe requires its own application* (XR Animator), and is also capable of hand and full body tracking (more on this later).

*Apparently XR Animator is very anal about how the 52 blendshapes are capitalized in Unity, so the model won’t track if you get it wrong. Use all lowercase in the blendshape names.
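If you run into that capitalization problem, batch-renaming beats retyping 52 names. A minimal Python sketch that builds an old-name to new-name mapping (all lowercase, per the tip above) and refuses to continue if two keys would collapse into the same name; the helper is hypothetical, and whether a given app really wants lowercase is worth confirming for your own setup:

```python
from typing import Dict, Iterable


def lowercase_renames(names: Iterable[str], skip: Iterable[str] = ("Basis",)) -> Dict[str, str]:
    """Map each shape key name that isn't already lowercase to its lowercase form.

    Names in `skip` (Blender's reference key is usually "Basis") are left alone.
    Raises ValueError if two different names would collapse into the same
    lowercase name, since renaming both would clash.
    """
    skip_set = set(skip)
    owner: Dict[str, str] = {}  # lowercase name -> original name that claims it
    renames: Dict[str, str] = {}
    for name in names:
        if name in skip_set:
            continue
        target = name.lower()
        if target in owner and owner[target] != name:
            raise ValueError(f"{owner[target]!r} and {name!r} would both become {target!r}")
        owner[target] = name
        if target != name:
            renames[name] = target
    return renames


if __name__ == "__main__":
    keys = ["Basis", "eyeBlinkLeft", "jawopen", "cheekPuff"]
    print(lowercase_renames(keys))
    # prints {'eyeBlinkLeft': 'eyeblinkleft', 'cheekPuff': 'cheekpuff'}
```

If the names you need to change are Blender shape keys, something like `for kb in bpy.context.object.data.shape_keys.key_blocks: kb.name = renames.get(kb.name, kb.name)` in Blender's Python console applies the mapping before you export (untested sketch). If the names that matter are the blendshape clip names on the Unity side instead, you'd apply the same mapping there.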

Drawbacks for the alternatives are that they are generally less accurate, and most do not support the ‘cheekPuff’ and ‘tongueOut’ blendshapes.

VTuber Software:

You have 2 main apps that are worth a shit nowadays:

VNyan:

The all round best one. Has a lot of options with tracking to make that 3D model a little less 3D-looking.

- Supports face tracking with webcam.

- Supports ARKit Tracking through webcam or iPhone.

- Supports using Leap Motion hand tracking.

- Supports VMC input from XR Animator, or other full body tracking software.

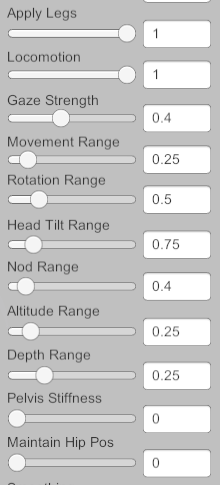

- Pendulum chains for eye physics, arm and finger sway, and restraints for tracking rotation and movement to keep a Live2D-like effect, etc. (I might write a separate post about this part; it's very important.)

- Also has a lot of viewer integration. Viewers can throw/spawn things if you enable it (to the point it can get fucking obnoxious.)
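How VNyan implements its restraints internally isn't covered here, but the general idea behind a Live2D-like look is easy to illustrate: only pass through part of the tracked head rotation, clamp it to a small range, and ease toward the result instead of snapping. A minimal Python sketch of that idea for one axis, not VNyan's actual code; the 0.6 scale, 20° limit and 0.3 smoothing defaults are made-up starting values:

```python
from dataclasses import dataclass


@dataclass
class AxisRestraint:
    """Scale, clamp and smooth one tracked rotation axis (degrees)."""
    scale: float = 0.6      # only pass through part of the real movement
    limit: float = 20.0     # never rotate further than this either way
    smoothing: float = 0.3  # 0 = frozen, 1 = follow the target instantly
    current: float = 0.0

    def update(self, tracked_deg: float) -> float:
        target = max(-self.limit, min(self.limit, tracked_deg * self.scale))
        self.current += (target - self.current) * self.smoothing
        return self.current


if __name__ == "__main__":
    yaw = AxisRestraint()
    for raw in (0, 10, 45, 90, 90, 90):
        print(f"tracked {raw:>3} deg -> model {yaw.update(raw):6.2f} deg")
```

Because the smoothed value only ever moves part of the way toward a clamped target, the model never turns past the limit, which is what keeps it reading as "mostly front-facing" like a Live2D rig.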

Drawback is that all support is done through the dev’s stream Discord, but they make good tutorials/explanation videos on how to use the software on YT.

Playlist:

VSeeFace:

Imagine VNyan, but with fewer features.

- Supports face tracking with webcam.

- Supports ARKit Tracking through webcam or iPhone.

- Supports using Leap Motion hand tracking.

- Supports VMC input from XR Animator, or other full body tracking software.

Drawbacks are that it lacks some really important features that VNyan has, so not a popular choice anymore. Gotta be thankful for the VSeeFace SDK being a thing, though, else we’d still be stuck with default MToon shading for our models.

Luppet, etc. are ass comparatively and some cost money, don’t bother.

Hand Tracking:

You’ve got 2 options.

Leap Motion:

Little box that tracks your hand movements. Best worn on a dedicated necklace or shirt clip-on holder from personal experience, but you can suspend it pretty much anywhere: desk, monitor, head, wherever.

Supposed to be top of the line outside dedicated mocap gloves, but the camera's field of view is pretty shit, and the tracking can be janky in practice.

The main positive point is that the hardware is doing the tracking, so it’s light on processing power.

XR Animator:

MediaPipe webcam tracking. Has an option to track body and hands. Pretty good, if a little jittery. It’s AI, so it uses the GPU, but it’s surprisingly light for what it is. (I can run it on an integrated AMD GPU, where it sits at around 50% utilization.)

Full Body Tracking:

If you’re not already a massive VRChat e-sex enthusiast, you won’t have the hardware for the higher quality tracking, and probably won’t have the money for the more expensive Vive/Rokoko tracking.

You still have options for that full body 3D stream, however. These can be jank as hell, but if it works, it works.

Just to start: at the time of writing, all VTubing software requires motion capture to be sent through the VMC protocol, meaning a lot of full body trackers need extra software/wrangling to work how you want. Generally, a program called Virtual Motion Capture (paid) is used to send tracking data from physical trackers to your VTubing software of choice.
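Under the hood, VMC is OSC messages sent over UDP. A minimal Python sketch of a sender using only the standard library: it hand-encodes two message types from the VMC protocol, `/VMC/Ext/Blend/Val` (blendshape name and value) followed by `/VMC/Ext/Blend/Apply`. Port 39539 is assumed because it's a common default for VMC receivers; match whatever your app's receiver is set to, and note the blendshape name has to exist on your model:

```python
import socket
import struct
import sys


def _osc_string(s: str) -> bytes:
    """OSC strings are null-terminated and padded to a multiple of 4 bytes."""
    raw = s.encode("utf-8") + b"\x00"
    return raw + b"\x00" * (-len(raw) % 4)


def osc_message(address: str, *args) -> bytes:
    """Encode one OSC message whose arguments are str, float or int."""
    tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, str):
            tags += "s"
            payload += _osc_string(arg)
        elif isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)  # big-endian float32
        elif isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)  # big-endian int32
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return _osc_string(address) + _osc_string(tags) + payload


def send_blendshape(name: str, value: float, host: str = "127.0.0.1", port: int = 39539) -> None:
    """Set one blendshape over VMC: Blend/Val, then Blend/Apply to apply it."""
    target = (host, port)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(osc_message("/VMC/Ext/Blend/Val", name, float(value)), target)
        sock.sendto(osc_message("/VMC/Ext/Blend/Apply"), target)


if __name__ == "__main__" and len(sys.argv) == 3:
    # e.g. python vmc_blend.py Joy 1.0  (the name must exist on your model)
    send_blendshape(sys.argv[1], float(sys.argv[2]))
```

Running `python vmc_blend.py Joy 1.0` (example file name) with your app's VMC receiver enabled should set that expression, assuming the receiver accepts blendshape data on its own; real trackers send the same kinds of messages many times per second, plus bone data on `/VMC/Ext/Bone/Pos`.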

Poverty setup:

XR Animator/ThreeDPoseTracker:

Both do webcam-based full body tracking. You need to be in a well-lit environment, and your full body has to be visible to the camera.

Both can be jittery and janky. I’ve seen a VRChat mocap smoother somewhere, but it’s probably not compatible with what we are doing here, so I won’t include it.
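If you end up writing a small relay of your own between a jittery tracker and your VTubing app, a common smoothing technique is the One Euro filter (Casiez, Roussel and Vogel, 2012): it smooths hard while you're nearly still and backs off as you move faster, so it cuts jitter without adding much lag. A minimal Python sketch for a single value; the default parameters are assumptions to tune, and you'd run one filter per value you want to smooth (a blendshape weight, a position coordinate, and so on):

```python
import math
from typing import Optional


class OneEuroFilter:
    """One Euro filter for a single noisy value sampled at varying intervals."""

    def __init__(self, min_cutoff: float = 1.0, beta: float = 0.01, d_cutoff: float = 1.0):
        self.min_cutoff = min_cutoff  # Hz; lower = smoother when holding still
        self.beta = beta              # how quickly smoothing relaxes with speed
        self.d_cutoff = d_cutoff      # Hz; cutoff for the speed estimate itself
        self.x_prev: Optional[float] = None
        self.dx_prev = 0.0

    @staticmethod
    def _alpha(cutoff: float, dt: float) -> float:
        tau = 1.0 / (2.0 * math.pi * cutoff)
        return 1.0 / (1.0 + tau / dt)

    def __call__(self, x: float, dt: float) -> float:
        if dt <= 0:
            raise ValueError("dt must be positive")
        if self.x_prev is None:  # first sample passes straight through
            self.x_prev = x
            return x
        # Smoothed estimate of how fast the value is changing.
        dx = (x - self.x_prev) / dt
        a_d = self._alpha(self.d_cutoff, dt)
        dx_hat = a_d * dx + (1 - a_d) * self.dx_prev
        # Faster movement -> higher cutoff -> less smoothing, less lag.
        cutoff = self.min_cutoff + self.beta * abs(dx_hat)
        a = self._alpha(cutoff, dt)
        x_hat = a * x + (1 - a) * self.x_prev
        self.x_prev, self.dx_prev = x_hat, dx_hat
        return x_hat


if __name__ == "__main__":
    import random

    f = OneEuroFilter()
    for _ in range(10):
        noisy = 0.5 + random.uniform(-0.05, 0.05)
        print(f"{noisy:.3f} -> {f(noisy, dt=1 / 60):.3f}")
```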

Both ThreeDPoseTracker and XR Animator support sending VMC data natively, so it’s pretty much as easy as pressing a button and making sure your VMC receiver is set to receive from the correct port.

Tut (it’s for VSeeFace, but it works pretty much the same in VNyan):

Not so poverty setup:

HaritoraX: $299 base price + $99 for elbow/arm tracking

https://en.shiftall.net/products/haritorax

A bit jittery, you’ll need to use VMC to send the tracking data and there isn’t much documentation for doing so, but looks solid and won’t require base stations like Vive.

US/JP Only (cry)

SlimeVR: $295 (full body)

https://www.crowdsupply.com/slimevr/slimevr-full-body-tracker#products

Looks similar in quality to HaritoraX, but I’ve heard it has a lot of issues, and I’m not even sure if they’ve delivered any of the prebuilt trackers or if the DIY ones are the only ones shipping currently. Either way, looks decent quality, might be worth it. Same as HaritoraX, you’ll need to use VMC to send the tracking data, and there is not much documentation for this either.

(I heard natively sending VMC tracking is in the works for this one too, so it’s probably the best for VTubing.)

Oil baron setup:

HTC Vive tracking

Rokoko full body mocap suit.

Both are very expensive.

I am not rich, nor am I having e-sex on VRChat, so I can’t offer much insight here on how these non-poverty options work, aside from the fact they have to be wrangled in some way to send VMC tracking data to your software. IDK man, work it out.

Tut (it’s for VSeeFace, but it works pretty much the same in VNyan):

Final Notes:

I’d say the most important thing is to make sure your model looks good BEFORE you add any shader or do any VNyan Live2D-lite fuckery. Pay special attention to your texture work: your model will likely be cel-shaded/anime-like, so the texture looking good is pretty much essential. Shaders can’t polish a turd.

Be careful with webcam-based tracking; it’s usually one click away from doxing your face. I’ve heard some software is set up so that it can never show the camera feed on screen, but you can’t trust them all. Just don’t fiddle with your tracking live, if possible.

You will have to molest your firewall to allow these apps to communicate; typically, allowing inbound UDP connections to the software will suffice. It’s often trial and error, however.
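A quick way to tell whether the firewall (or a wrong port) is the problem: close your VTubing app so the port is free, listen on that port yourself, and see if anything arrives. A minimal Python sketch using only the standard library; it assumes the sender uses OSC over UDP as VMC does, reports OSC bundles as `#bundle`, and the script name in the usage line is just an example:

```python
import socket
import sys
import time
from collections import Counter


def osc_address(packet: bytes) -> str:
    """Return the OSC address at the start of a packet ('#bundle' for bundles)."""
    end = packet.find(b"\x00")
    return packet[: end if end != -1 else len(packet)].decode("utf-8", "replace")


def listen(port: int = 39539, seconds: float = 10.0, host: str = "0.0.0.0") -> Counter:
    """Count incoming UDP packets per OSC address for a few seconds."""
    counts: Counter = Counter()
    deadline = time.monotonic() + seconds
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind((host, port))  # fails if your VTubing app still holds the port
        while True:
            remaining = deadline - time.monotonic()
            if remaining <= 0:
                break
            sock.settimeout(remaining)
            try:
                packet, _sender = sock.recvfrom(65535)
            except socket.timeout:
                break
            counts[osc_address(packet)] += 1
    return counts


if __name__ == "__main__":
    if len(sys.argv) < 2:
        print("usage: python vmc_listen.py PORT [SECONDS]")
    else:
        seconds = float(sys.argv[2]) if len(sys.argv) > 2 else 10.0
        result = listen(int(sys.argv[1]), seconds)
        if not result:
            print("Nothing arrived: check the sender's IP/port and your firewall rules.")
        for address, n in result.most_common():
            print(f"{n:6d}  {address}")
```

If packets show up when the tracker runs on the same PC but not when it runs on another device (an iPhone, say), that usually points at the firewall or the IP you typed rather than the tracker itself.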

Thanks for coming to my TED Talk. If anything's confusing, I can clear it up.

Important reminder:

(Attachment: ywnbal2d.mp3)
